- dots & dashes

You Know Your Strengths. AI Knows Your Weaknesses.

Tools to examine your own reporting, AI-generated op-eds, and Abraham Lincoln goes full influencer.

I read through a lot of news articles about AI. An alarming amount, some (me) would say. But sometimes an article stops me in my tracks.

One of them was a recent story from The Guardian: “The LA Times published an op-ed warning of AI’s dangers. It also published its AI tool’s reply”.

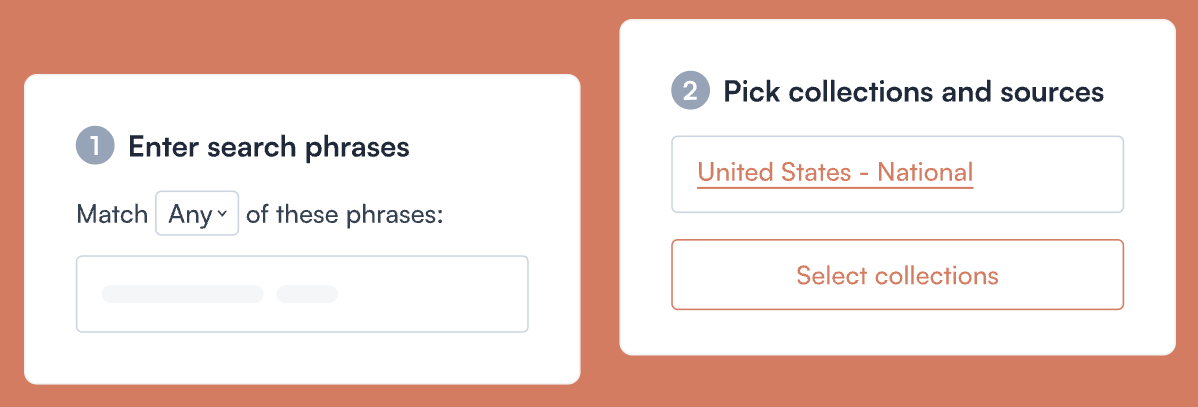

This isn’t the “Ummm…” section of the newsletter, so we’ll get to the ethics of this use of AI in a sec. First, the story got me thinking about how AI can evaluate a reporter’s body of work to uncover recurring perspectives, biases, missing information, or even new opportunities for reporting. In a previous post, I explained how to do this for a single article with tools like ChatPDF and Adobe Acrobat AI Assistant. But what if you want to explore a newsroom’s entire body of work? Several tools and technologies can help you get started.

Google’s NotebookLM can analyze up to 50 sources on a free account and 300 on an enterprise account, surfacing insights and letting you ask questions about the sources. Google Pinpoint takes archive searching a step further with multi-user options and the ability to analyze video and photos, although uploads are currently limited to 10 documents at a time. Humata.ai also summarizes documents and extracts recurring themes, and it is built to handle many large files, whereas ChatGPT is better suited to analyzing one or a few documents at a time. Media Cloud, with its large database, can show how an issue is discussed across media sources and perform other detailed analyses.

“Ummm…” AI

Uses of AI in media that raise ethical questions

Let’s get back to the LA Times’ use of AI alongside op-eds. If you haven’t clicked through to the Guardian’s write-up, which I encourage you to do, here’s a quick synopsis:

The LA Times launched an AI integration powered by Perplexity that automatically generates responses beneath opinion pieces to present different views. An op-ed warning that AI could ruin trust in documentary footage is followed on the page by a 150-word AI-written rebuttal defending AI in filmmaking. Editorial staff do not create or edit this content. An AI-generated analysis also labels the article’s political leaning, in this case “Center Left.” According to the Times’ owner, Patrick Soon-Shiong, the goal of deploying the tech was to break out of the “echo chamber.”

This use of AI raised a lot of questions for me. One lesson we learn as we develop our craft in journalism is that a story rarely has just two sides. So how do you decide which viewpoints to include? And because AI can generate a seemingly infinite range of responses, the possible counterpoints are just as limitless. Highlighting AI-generated viewpoints makes sense on the surface, but do those viewpoints reflect what actual people think, or are we wandering into devil’s advocate territory?

AI is like an onion. So many layers.

As a further thought experiment, I pasted the AI response to the Times’ op-ed into ChatGPT and asked it to write a rebuttal to the rebuttal. Here’s what it came up with:

AI can expand access to historical storytelling, but accuracy still matters. When synthetic images look real, even small inaccuracies can blur the line between fact and fiction. And while it's true that archives carry historical biases, AI risks amplifying them in ways that feel authoritative. For AI to truly support inclusive storytelling, it needs human oversight and strong ethical standards to avoid replacing truth with well-produced guesswork.

O.M.G. AI

Mind-bending uses of AI that aren’t usually appropriate for journalism but are nonetheless thought-provoking

Speaking of alternative takes on history, AI-generated videos of historical figures in the middle of making history have become very popular on social media. Think Abraham Lincoln posing at Gettysburg, Christopher Columbus sailing the ocean blue, or Thomas Edison taunting Tesla about his newest invention.

On the surface, these videos are a unique way to visualize the impact of notable people throughout time. But they raise what has become a recurring question about AI: What if fictional content becomes fact for people who haven’t been exposed to accurate information? Was the Taj Mahal actually over budget? Maybe… maybe not. But AI certainly has a perspective it can show you.

Enjoying dots & dashes? Share with a colleague or friend! Have feedback or questions? Email me at [email protected].